THE MUSIC COMPUTING LAB

PROJECTS

Current Projects

EPSRC CHIME Network in Music and Human Computer Interaction

Simon Holland (PI) and Tom Mudd (Co-I, University of Edinburgh) have been awarded a new EPSRC grant to create a network in Music and Human Computer Interaction (CHIME, the Computer Human Interaction and Music nEtwork; http://chime.ac.uk). Industrial collaborators include Generic Robotics, Playable Technology and TikTok; charitable collaborators include Drake Music, Special Needs Music, and the Stables Theatre.

Academic co-applicants include: Steve Benford, Nottingham; Alan Blackwell, Cambridge; Carola Boehm, Staffordshire; Lamberto Coccioli, Royal Birmingham Conservatoire; Rebecca Fiebrink, University of the Arts London; Andrew McPherson, Queen Mary; Atau Tanaka, Goldsmiths; Owen Green, Huddersfield; Thor Magnusson, Sussex; Eduardo Miranda, Plymouth; and Paul Stapleton and Maarten van Walstijn, Queen’s Belfast.

The network aims to connect and support researchers from diverse disciplines, whether early-career or established, whose interests span any combination of Music and Human Computer Interaction (also known as Music Interaction, or MI).

CHIME will create a new interdisciplinary Music Interaction community of researchers, industry partners and charities to integrate and disseminate knowledge, training and expertise across a wide range of emerging areas through workshops, training, visits and new collaborations.

The project starts in April 2022 and has been awarded £46,929 to carry out this work over the next three years.

Polifonia: A digital harmoniser for Musical Heritage Knowledge

The Polifonia Project (2021–2024, €3,025,435 in total, of which €484,936 is awarded to the OU) is funded by EU Horizon 2020. Enrico Daga is the Open University PI, and Enrico Motta, Paul Mulholland and Simon Holland are Co-Is. The project involves 10 collaborating partners from Italy, the Netherlands, France and Ireland, and 10 stakeholders, including the Bibliothèque Nationale de France and the Stables Theatre in Wavendon. This new project will design, implement and evaluate innovative multimodal haptic and gestural interaction techniques for music, with particular attention to inclusion for people with diverse disabilities, including profound deafness.

Enhancing access and inclusion in musical activities for the hearing and physically impaired (NCACE)

Graeme Surtees (Head of Learning and Participation at the Stables Theatre) and Simon Holland (OU PI) have been awarded a grant by NCACE (the National Centre for Academic and Cultural Exchange) for the ENSEMBLE project. The co-applicants are Enrico Daga, Paul Mulholland and Jason Carvalho (KMI); Muriel Swijghuisen Reigersberg (Knowledge Exchange); James Dooley and Manuella Blackburn (Music); and Ursula White, Head of Community Inclusion at the Stables Theatre. This knowledge exchange project will explore ways to use Harmony Space and the Haptic Bracelets (technologies previously developed at the Music Computing Lab) to enhance inclusion and participation in musical activities at the Stables Theatre.

The project runs from January to June 2022 and has been awarded £2,000 by NCACE, with £41,000 of in-kind support from the Stables and the Open University.

MakeActive: Haptic Virtual Reality for multi-user, collaborative design at a distance, integrating sighted and visually-impaired users (Innovate UK)

Alistair Barrow of Generic Robotics (PI), Lisa Bowers (OU PI), Simon Holland and Claudette Davis-Bonnick (University of the Arts) have been awarded a new grant for the MakeActive Project.

Technology can routinely provide high-fidelity, real-time visual and auditory communication at a distance. However, some activities, such as medicine, teaching and design, depend implicitly on direct physical touch and feel. This is particularly important for sight-impaired people. The MakeActive Project explores ways to extend existing systems with novel hardware and software that provide haptic and proprioceptive affordances (making use of the senses of touch and body position, respectively) to deepen access and inclusion for all, including sight-impaired users. The project combines prototyping of new hardware and software with user trials involving sight-impaired users.

The project has been awarded £24,500 by Innovate UK and runs in the first quarter of 2022.

HAPPIE

The HAPPIE project (Haptic Authoring Pipeline for the Production of Immersive Experiences; 2019–2021; £998,538) is funded by Innovate UK to develop novel haptic interaction technologies. It places particular emphasis on technologies that promote inclusion for visually impaired designers. Lisa Bowers is OU PI, and Simon Holland and Janet van der Linden are Co-Is. Industrial collaborators include Generic Robotics Ltd, Sliced Bread Animation and Numerion Software.

Choosers

Choosers is a prototype algorithmic programming system centred on a new abstraction designed to give non-programmers access to algorithmic music composition methods. It provides a graphical notation that foregrounds the structural elements most important in algorithmic composition (such as sequencing, choice, multi-choice, weighting, looping and nesting) in a way that is accessible to non-programmers. (Matt Bellingham, Simon Holland, Paul Mulholland)
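The following hypothetical Python sketch makes the structural elements listed above concrete. It models sequencing, choice, multi-choice, weighting, looping and nesting as composable nodes. It is not the Choosers notation or its implementation. The node names `Seq`, `Choice` and `Loop`, the `render` function, and the decision to draw multi-choice picks with replacement are all assumptions made for illustration.

```python
import random
from dataclasses import dataclass
from typing import Optional


def render(node, rng):
    """Expand a node into a flat list of note names; leaves are plain strings."""
    if isinstance(node, str):
        return [node]
    return node.render(rng)


@dataclass
class Seq:
    """Sequencing: render each child in order."""
    children: list

    def render(self, rng):
        out = []
        for child in self.children:
            out.extend(render(child, rng))
        return out


@dataclass
class Choice:
    """Choice (k=1) or multi-choice (k>1), drawn with replacement, optionally weighted."""
    children: list
    weights: Optional[list] = None
    k: int = 1

    def render(self, rng):
        out = []
        for child in rng.choices(self.children, weights=self.weights, k=self.k):
            out.extend(render(child, rng))
        return out


@dataclass
class Loop:
    """Looping: render the body a fixed number of times."""
    body: object
    times: int

    def render(self, rng):
        out = []
        for _ in range(self.times):
            out.extend(render(self.body, rng))
        return out


# Nesting: any node may contain any other node.
tune = Seq(["C4", Choice(["E4", "G4"], weights=[3, 1]), Loop(Seq(["D4", "C4"]), times=2)])
print(render(tune, random.Random(1)))
```

Every node renders to a flat list, so nesting needs no extra machinery. A graphical notation would present the same tree visually rather than as code.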

Song Walker Harmony Space

Song Walker Harmony Space is an offshoot of the Whole Body Harmony Space project. It is a relatively compact setup, yet it still makes full use of bodily movement and gesture. For full, up-to-date information, see Harmony Space. The system is suitable for two to four players at once, with roles and functions distributed among the players.

(Simon Holland, Anders Bouwer, Mat Dalgleish)

Design and Evaluation of Tangible and Multi-touch Interfaces for Collaborative Music Making

Our research focuses on collaboration and musical engagement with tangible and multi-touch interfaces for collaborative music making. Music making tends to be a social and collaborative activity, in which communication and coordination between musicians play a key role. With the advent of tangible and multi-touch interfaces, novel applications have supported activities that differ from those available on single-user PCs. These interfaces have physical properties (e.g. size and volume) and affordances, and they bring a shift towards more physical interaction with digital data. These are among the reasons why co-located collaborative activities in general, and collaborative real-time music activities in particular, have been explored on these systems.

We are investigating a formal evaluation methodology for measuring the level of musical engagement and collaboration with these interfaces (Issues and techniques for collaborative music making). This may help inform the design of multi-user, co-located musical prototypes such as TOUCHtr4ck. We are also exploring a theoretical framework of interaction design considerations for collaboration on musical tabletops.

(Robin Laney, Anna Xambó, Chris Dobbyn, Sergi Jordà, Mattia Schirosa)

Music Jacket

The Music Jacket is a system for novice violin players that helps them learn to hold the instrument correctly and to develop a good bowing action. We work closely with violin teachers, and the system is designed to support conventional teaching methods. We use an Animazoo IGS-190 inertial motion capture system to track the position of the violin and the trajectory of the bow. Vibrotactile feedback tells the student when they are holding the violin incorrectly or when their bowing trajectory has deviated from the desired path.
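The hypothetical sketch below gives a rough illustration of the feedback rule described above: it flags bow positions that stray too far from an ideal bowing path. It assumes the motion-capture data has already been reduced to 2D points in the violin's frame of reference. It also models the desired path as a straight line segment and uses an arbitrary tolerance. None of these names or values come from the Music Jacket system itself.

```python
import math


def distance_to_segment(p, a, b):
    """Shortest distance from point p to the segment a-b (all 2D tuples)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the line through a and b, clamped to the segment's end points.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def vibrate_flags(trajectory, path_start, path_end, tolerance):
    """Return True for each sampled bow position where feedback would fire."""
    return [distance_to_segment(p, path_start, path_end) > tolerance for p in trajectory]


# Hypothetical bow positions (cm) against an ideal 60 cm straight stroke.
print(vibrate_flags([(10, 0.5), (30, 2.5), (50, -0.2)], (0, 0), (60, 0), tolerance=2.0))
```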

We are investigating whether this system motivates students to practice and improves their technique.

(Janet van der Linden, Erwin Schoonderwaldt, Rose Johnson, Jon Bird)

Algorithms to Discover Musical Patterns

Our research puts specific aspects of music perception, such as the discovery of certain types of musical pattern, on an algorithmic footing. We evaluate the resulting algorithms by comparing them with human experts and listeners performing analogous tasks. We then use disparities between algorithm output and human responses to shed light on the more nuanced strategies behind human music perception, and so to improve our models. (Tom Collins, Robin Laney)
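One well-known published approach to discovering repeated patterns is the Structure Induction Algorithm (SIA) of Meredith, Lemström and Wiggins, which can be sketched in a few lines of Python. It is shown here only to illustrate what putting pattern discovery on an algorithmic footing can look like. It is not the lab's own algorithm. The function name and the (onset, pitch) encoding are assumptions of this sketch.

```python
from collections import defaultdict


def sia(points):
    """Structure Induction Algorithm (SIA) sketch.

    `points` are (onset, pitch) pairs. The result maps every translation vector v
    to its maximal translatable pattern: all points p such that p + v is also in
    the set. Sorting first makes every vector lexicographically positive.
    """
    pts = sorted(set(points))
    patterns = defaultdict(list)
    for i, p in enumerate(pts):
        for q in pts[i + 1:]:
            vector = (q[0] - p[0], q[1] - p[1])
            patterns[vector].append(p)
    return dict(patterns)


# A three-note motif (C, D, E as MIDI pitches) repeated four beats later.
melody = [(0, 60), (1, 62), (2, 64), (4, 60), (5, 62), (6, 64)]
patterns = sia(melody)
print(patterns[(4, 0)])  # [(0, 60), (1, 62), (2, 64)]
```

The pattern for the vector (4, 0) recovers the repeated motif. Other vectors, such as (1, 2), also yield large patterns, and the method considers every pair of points (quadratic time). These are two reasons why practical systems add filtering and ranking stages that can then be compared against human judgements.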

Harmony Space

Novice improvisers typically get stuck ‘noodling’ around individual chords, partly because they are unaware of larger-scale harmonic pathways. Harmony Space represents harmonic relationships as 2D spatial phenomena, such as trajectories. As users move through the space, they hear the sounds corresponding to the different locations. Our working hypothesis is that tactile guidance around Harmony Space will improve musicians’ knowledge of larger-scale harmonic elements, such as chord progressions, and thereby improve their improvisation skills.
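The hypothetical sketch below shows how a two-dimensional pitch grid turns harmonic relationships into spatial ones. It assumes a grid in which one axis steps by perfect fifths and the other by major thirds, similar in spirit to the layouts Harmony Space uses. The axis orientation, the function names and the note spellings are assumptions for illustration.

```python
NAMES = ["C", "C#", "D", "Eb", "E", "F", "F#", "G", "Ab", "A", "Bb", "B"]


def pitch_class(x, y):
    """Pitch class at cell (x, y): x steps by fifths (7 semitones), y by major thirds (4)."""
    return (7 * x + 4 * y) % 12


def major_triad(x, y):
    """Root, major third (one cell up) and fifth (one cell right) form a compact triangle."""
    return [pitch_class(x, y), pitch_class(x, y + 1), pitch_class(x + 1, y)]


# Chord roots moving one cell left at a time descend by fifths: a straight-line trajectory.
trajectory = [(3, 0), (2, 0), (1, 0), (0, 0)]
print([NAMES[pitch_class(x, y)] for x, y in trajectory])  # ['A', 'D', 'G', 'C']
print([NAMES[pc] for pc in major_triad(0, 0)])  # ['C', 'E', 'G']
```

In this layout, a common progression such as A, D, G, C becomes a single straight line rather than a list of unrelated chord symbols. That larger-scale pathway is the kind of structure novice improvisers tend to miss.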

An open research question we are investigating is whether full-body exploration of Harmony Space, for example moving around a large floor projection, brings any benefits over using a mouse and keyboard to interact with Harmony Space on a computer monitor.

(Simon Holland)

Computational Modelling of Tonality Perception

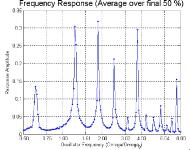

We are building a psychoacoustic model of the feelings of expectation and resolution induced by various chord progressions and scales. The model is currently being tested by comparing its predictions with those given by participants in experimental settings. (Andrew Milne, Robin Laney, David Sharp)
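As a toy illustration of the kind of comparison such a model might make, the sketch below scores how well a chord fits a scale. It folds each tone's first few harmonics into 12 pitch-class bins and takes the cosine similarity of the resulting vectors. The approach is loosely in the spirit of spectral pitch-class similarity measures, but it is not the lab's model. The semitone rounding, the six partials and the 1/n weighting are arbitrary assumptions. A real psychoacoustic model would use much finer pitch resolution and model perceptual uncertainty.

```python
import math


def spectrum(pitch_classes, partials=6, rolloff=1.0):
    """Crude 12-bin 'spectral' vector: each tone adds harmonics 1..partials,
    each folded to its nearest pitch class and weighted 1 / n**rolloff."""
    vec = [0.0] * 12
    for pc in pitch_classes:
        for n in range(1, partials + 1):
            offset = round(12 * math.log2(n)) % 12  # harmonic n, rounded to a semitone
            vec[(pc + offset) % 12] += 1.0 / n ** rolloff
    return vec


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


c_major_scale = spectrum([0, 2, 4, 5, 7, 9, 11])
for name, triad in [("G major", [7, 11, 2]), ("F# major", [6, 10, 1])]:
    print(name, round(cosine(spectrum(triad), c_major_scale), 3))
```

With these settings, the G major triad, whose notes all belong to C major, scores much higher against the C major scale than the F# major triad does.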

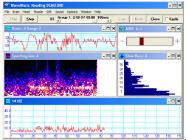

Exploring Computational Models of Rhythm Perception

We are testing rhythm perception models (including Ed Large’s non-linear dynamics model) with rhythms containing two different pulses, such as the three-against-four rhythms found in African-derived music. (Vassilis Angelis, Simon Holland, Martin Clayton, Paul Upton)
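The stimuli described above can be pictured on a shared time grid. The hypothetical helper below lays out two isochronous pulses, such as three against four, over one cycle, using the least common multiple of the two counts as the grid size. It only generates the rhythm. It does not implement Large's model or any other perception model, and the function names are illustrative.

```python
from math import lcm  # requires Python 3.9+


def polyrhythm(a, b):
    """Onset grids for a against b over one cycle, on the finest grid both pulses share."""
    n = lcm(a, b)
    pulse_a = [i % (n // a) == 0 for i in range(n)]
    pulse_b = [i % (n // b) == 0 for i in range(n)]
    return pulse_a, pulse_b


def show(pulse):
    """Render a pulse grid as text: 'x' for an onset, '.' for silence."""
    return "".join("x" if hit else "." for hit in pulse)


three, four = polyrhythm(3, 4)
print(show(three))  # x...x...x...
print(show(four))   # x..x..x..x..
```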

Embodied Cognition in Music Interaction Design

In domains such as music, technical understanding of the parameters, processes and interactions involved in defining and analysing the structure of artifacts is often restricted to domain experts. Consequently, music software is often difficult to use for people without such specialised knowledge. This work explores how that problem might be addressed by drawing explicitly on domain-specific conceptual metaphors in the design of user interfaces. (Katie Wilkie, Simon Holland, Paul Mulholland)

Use of Multi-touch Surfaces for Microtonal Tunings

Are there ways of laying out, in two dimensions, the tones of Western, non-Western and microtonal scales so as to make them easier to play and understand? We seek to address this question with a novel multi-touch interface based on the Thummer. It takes advantage of the flexibility of virtual interfaces to apply dynamic transformations such as shears, rotations and button colour changes. This allows an optimal note layout to be chosen automatically for a huge variety of microtonal (and familiar) scales. We recently presented a prototype, Hex Player, a virtual musical controller, at NIME (30 May–1 June, Oslo, Norway).
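One common way to describe such layouts is as an isomorphic, two-generator lattice. Every button's pitch is a whole-number combination of a period (here, the octave) and a generator (here, a fifth), so each interval always has the same shape under the fingers. The sketch below assumes that model. The function name, the coordinate convention and the example tunings are illustrative choices, not the Hex Player's code.

```python
import math


def pitch_cents(i, j, generator, period=1200.0):
    """Pitch, in cents above the reference button, of button (i, j) in a hypothetical
    isomorphic layout: i counts periods (octaves) and j counts generators (fifths)."""
    return i * period + j * generator


MAJOR_THIRD = (-2, 4)  # four fifths up, two octaves down

for name, fifth in [("12-TET", 700.0), ("1/4-comma meantone", 1200 * math.log2(5) / 4)]:
    print(name, round(pitch_cents(*MAJOR_THIRD, generator=fifth), 2))
# 12-TET 400.0
# 1/4-comma meantone 386.31
```

The same button offset gives a major third in both tunings, so changing the tuning never changes the fingering. In this sketch, a shear or rotation would only change where each (i, j) button is drawn on the surface, not the pitch arithmetic.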

(Andrew Milne, Anna Xambó, Robin Laney, David Sharp)

Neurophony

Neurophony is a brain interface for music that incorporates the Harmony Space software. It results from a collaboration with Eduardo Miranda at the University of Plymouth and visiting intern Fabien Leon, with kind support from the Interdisciplinary Centre for Computer Music Research at the University of Plymouth. (Fabien Leon, Eduardo Miranda, Simon Holland)

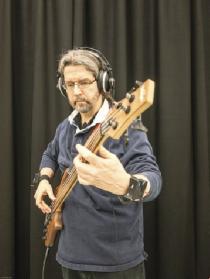

The Haptic Drum Kit

The Haptic Drum Kit consists of four computer-controlled vibrotactile devices, one attached to each wrist and ankle, that generate pulses to help a drummer play rhythmic patterns. One aim is to foster rhythm skills and multi-limb coordination. Another aim is to systematically develop skills in recognizing, identifying, memorizing, analyzing, reproducing and composing monophonic and polyphonic rhythms. A pilot study compared the efficacy of audio and haptic guidance for learning to play polyphonic drum patterns of varying complexity. Although novice drummers can learn intricate drum patterns using haptic guidance alone, the study found that most participants preferred a combination of audio and haptic signals.
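The hypothetical sketch below turns a multi-limb drum pattern into a time-ordered list of pulses, one stream per wrist and ankle. This illustrates how such a pattern could be scheduled. The step-string encoding, the limb names, the tempo and the function name are assumptions, and the actual system's timing and hardware interface are not shown.

```python
LIMBS = ("left_wrist", "right_wrist", "left_ankle", "right_ankle")


def pulse_schedule(pattern, bpm, steps_per_beat=2):
    """Turn per-limb step strings ('x' = pulse, '.' = rest) into sorted (seconds, limb) events."""
    step_seconds = 60.0 / bpm / steps_per_beat
    events = []
    for limb, steps in pattern.items():
        if limb not in LIMBS:
            raise ValueError(f"unknown limb: {limb}")
        for index, step in enumerate(steps):
            if step == "x":
                events.append((index * step_seconds, limb))
    return sorted(events)


# One bar of a basic rock beat in eighth notes: hi-hat (right wrist),
# snare on beats 2 and 4 (left wrist), bass drum on beats 1 and 3 (right ankle).
beat = {
    "right_wrist": "xxxxxxxx",
    "left_wrist": "..x...x.",
    "right_ankle": "x...x...",
}
for t, limb in pulse_schedule(beat, bpm=120):
    print(f"{t:.2f}s {limb}")
```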

The Haptic Drum Kit may have applications for people with Parkinson’s disease and for patients in need of multi-limb rehabilitation. We are seeking research partnerships to explore such applications.

The Haptic Drum Kit research, including work on the Haptic iPod, is being carried out in collaboration with the Music Computing Lab, expert musicologists, drummers and music educators.

(Simon Holland, Anders Bouwer, Mat Dalgleish, Topic Hurtig)

Music and Human Computer Interaction

Holland, Simon; Wilkie, Katie; Mulholland, Paul and Seago, Allan, eds. (2013). Music and Human-Computer Interaction. Springer, London. 292 pages. ISBN 1-4471-2989-X, 978-1-4471-2989-9.

This book, edited by members of the Music Computing Lab, presents state-of-the-art research in Music and Human-Computer Interaction (also known as ‘Music Interaction’). It covers interactive music systems, digital and virtual musical instruments, and theories, methodologies and technologies for Music Interaction. The musical activities covered include composition, performance, improvisation, analysis, live coding and collaborative music making. The book explores innovative approaches to existing musical activities, as well as tools that make new kinds of musical activity possible. It is the first book in Springer's new Cultural Computing Series.

The Haptic Bracelets

The Haptic Bracelets are prototype self-contained wireless bracelets for wrists and ankles, with built-in accelerometers, vibrotactile actuators and processors. They can work by themselves, in networked synchronised groups, or with smartphones, tablets or computers. The Haptic Bracelets are designed to be worn four at once, one on each wrist and one on each ankle, although any number from one to four may usefully be worn. A pair on the ankles or wrists has a rich range of uses. The bracelets are interactive devices for both input and output, enabling communication, processing, analysis and logging. They can be used for local or remote tactile communication between two or more wearers. Musical applications include:

- Understanding rhythm

- Drumming and rhythm tuition

- Teacher-learner show and feel

- Feeling explicitly what each limb of the drummer does, or feeling an abstracted version of the rhythm

- Performance recording and playback

- Mental haptic mixer

- Slow-motion playback

- Passive learning

- Compositional understanding

- Inter-musician co-ordination

- African polyrhythm training

- Drumming for the Deaf
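Several of these applications, such as performance recording and playback or teacher-learner show and feel, depend on turning accelerometer data into discrete events. A minimal, hypothetical sketch of that first step is threshold-based onset detection with a refractory period. The function name, the threshold and the 100 Hz sample rate are assumptions, and the bracelets' actual firmware may work quite differently.

```python
import math


def detect_hits(samples, rate_hz, threshold, refractory_s=0.1):
    """Return onset times (seconds) where the acceleration magnitude rises past
    `threshold`, ignoring new crossings within `refractory_s` of the last hit."""
    hits = []
    last_hit = -math.inf
    was_above = False
    for n, (ax, ay, az) in enumerate(samples):
        t = n / rate_hz
        is_above = math.sqrt(ax * ax + ay * ay + az * az) >= threshold
        if is_above and not was_above and t - last_hit >= refractory_s:
            hits.append(t)
            last_hit = t
        was_above = is_above
    return hits


# One second of hypothetical 100 Hz data: resting at 1 g, with strikes at 0.1 s and 0.5 s.
samples = [(0.0, 0.0, 1.0)] * 100
for n in (10, 11, 50):
    samples[n] = (0.0, 0.0, 3.0)
print(detect_hits(samples, rate_hz=100, threshold=2.0))  # [0.1, 0.5]
```

For show and feel, the returned times could then be sent to a learner's bracelet as vibration pulses. That messaging step is not shown.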

(Simon Holland, Mat Dalgleish, Anders Bouwer, Maxime Canelli, Oliver Hoedl)